AI thinking out loud

Reimagining how we communicate with technology and each other.

As an information and communication technology professor dabbling in AI, I was excited by the opportunities of prompt engineering in plain English, summed up by Andrej Karpathy’s viral remark that “The hottest new programming language is English”. Why is talking to and with machines in natural written and spoken English disrupting tech investment and the long-held scientific structures of Communication Science?

Communication Science explores how humans create, transmit, and receive messages, focusing on both individual and societal levels. It encompasses a wide range of topics, from face-to-face conversations to social media communication, and examines how communication shapes relationships, cultures, and societies. But now we must include a brand new dimension: conversations with machines.

This has driven the rise of prompt engineering as a highly paid career. Doing the work well doesn’t require any software programming skills. The best prompt engineers combine analytical and creative skills, which they apply to writing prompts. As I’ve argued before, to get the best out of AI you need to write and speak well.

However, specificity and context are as important as how the words are strung together in a prompt. And subject matter expertise is becoming a necessity. If we imagine company-specific LLMs that are trained on a repository of knowledge or industry-specific language, a deep understanding of that space will be critical to extracting the desired outputs from the AI.

DeepSeek, particularly its R1 model, has sparked significant discussion and a reassessment of investment opportunities by demonstrating that high-performing language models can be developed at much lower cost than previously assumed, potentially challenging the dominance of established players like OpenAI, Meta and Nvidia.

DeepSeek-R1 works by generating a chain of intermediate reasoning steps, in effect thinking out loud, before committing to a final answer. At each stage it can check and revise its reasoning before moving on to the next, which results in a much stronger final output. It’s like the difference between writing the first thing that comes into your head during an exam and planning your essay before you begin. Chain-of-thought (CoT) prompting applies the same idea: it encourages LLMs to solve problems by breaking them down into intermediate steps, mimicking human reasoning, rather than jumping straight to an answer.
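To make the contrast concrete, here is a minimal Python sketch of the two prompting styles. It only builds prompt strings and parses a reply; it does not call any real model or API, and the instruction wording and the “Final answer:” marker are my own illustrative choices, not something DeepSeek or any other vendor prescribes.

```python
from typing import Optional

FINAL_MARKER = "Final answer:"  # illustrative convention, not a standard


def direct_prompt(question: str) -> str:
    """Ask for the answer straight away, with no visible reasoning."""
    return f"{question}\nAnswer:"


def chain_of_thought_prompt(question: str) -> str:
    """Ask the model to reason through intermediate steps before answering."""
    return (
        f"{question}\n"
        "Think this through step by step. Write out each intermediate step, "
        "check it, and revise it if it looks wrong.\n"
        f"When you are done, give your conclusion on a line starting with '{FINAL_MARKER}'."
    )


def extract_final_answer(reply: str) -> Optional[str]:
    """Return the text after the last final-answer marker, or None if there is none."""
    idx = reply.rfind(FINAL_MARKER)
    if idx == -1:
        return None
    return reply[idx + len(FINAL_MARKER):].strip()


if __name__ == "__main__":
    question = "A train leaves at 9:40 and the trip takes 85 minutes. When does it arrive?"
    print(chain_of_thought_prompt(question))
    # A made-up reply standing in for what a model might return
    reply = "Step 1: 9:40 + 60 min = 10:40.\nStep 2: 10:40 + 25 min = 11:05.\nFinal answer: 11:05"
    print(extract_final_answer(reply))
```

The only difference between the two prompts is the instruction to show intermediate steps. Reasoning models such as DeepSeek-R1 are trained to produce that kind of trace without being asked.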

Implications for investment

There are two consequences of the DeepSeek team’s work. First, the group found a way to make building the underlying model much cheaper. Although the US$5 million price tag dramatically understates the actual cost by ignoring spending on labour and hardware, the model still represents a major improvement in efficiency. Second, DeepSeek was able to get to market quickly and share an open-source version of its model.

Because it comes from China

The underlying approach that made DeepSeek-R1 a success wasn’t new. Chain-of-thought reasoning has been known to American AI labs for some time. So how is it that the Chinese lab seems to have been the first to release a publicly available model of this kind?

It comes down to risk appetite.

DeepSeek users acknowledge that it makes mistakes and that, being based on open-source code, it is capable of taking control of your computer. Like TikTok, DeepSeek-R1 is subject to Chinese government censorship, which is built into its trained model.

US AI companies recognise these dangers, which means they cannot release a model that entails significant risk. They have much more to lose than the founders of DeepSeek, who had to stockpile 10,000 NVIDIA A100 chips for US$200 million in anticipation of US export bans on the technology.

DeepSeek is happy to take a calculated bet: that its flagship model can be released to a sufficiently small number of users over time, and that feedback from these users can be tapped to make it less likely to cause mayhem in the future.

What is the big breakthrough in communication?

The breakthrough in voice AI comes from two key innovations. First, the shift from cascading architectures (speech-to-text → text processing → text-to-speech) to direct speech-to-speech models eliminates the intermediate processing stages that previously slowed conversational AI interactions. Second, latency and cost have fallen sharply. When OpenAI initially released its Realtime API, the price (around $18/hour) made it impractical for widespread adoption. Four months later, Google’s release of Gemini 2.0 Flash and OpenAI’s 60% price reduction opened the floodgates for affordable and human-like voice AI applications.
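A rough way to see why removing the cascade matters is to add up the delays. In the Python sketch below, every stage latency is a made-up placeholder rather than a measurement, and the cost line assumes, for simplicity, that the 60% cut applies to the whole $18/hour figure. That is my assumption, not a published price.

```python
# Illustrative per-turn latencies in milliseconds: placeholders, not benchmarks.
CASCADE_STAGES_MS = {
    "speech_to_text": 300,
    "text_processing": 500,
    "text_to_speech": 250,
}
DIRECT_SPEECH_TO_SPEECH_MS = 500  # hypothetical single-model figure


def cascade_latency_ms(stages: dict) -> int:
    """In a cascade each stage waits for the previous one, so the delays add up."""
    return sum(stages.values())


def discounted_hourly_cost(base_per_hour: float, reduction: float) -> float:
    """Apply a fractional price cut (0.6 means 60% off) to an hourly rate."""
    return round(base_per_hour * (1 - reduction), 2)


if __name__ == "__main__":
    print(f"Cascade: {cascade_latency_ms(CASCADE_STAGES_MS)} ms per turn")
    print(f"Direct:  {DIRECT_SPEECH_TO_SPEECH_MS} ms per turn")
    print(f"$18/hour after a 60% cut: ${discounted_hourly_cost(18.0, 0.6):.2f}/hour")
```

The point is structural: a cascade’s delay is the sum of its stages, while a direct model has only one stage to wait for. On the stated assumption, $18/hour drops to about $7.20/hour.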

We are mortal beings, granted only finite time to learn anything. The human brain is complex and powerful, but we each are allotted just one of them, so our computational capacity is bounded. And we have no way to directly transfer the contents of our brain to that of anyone else — our shared knowledge has to pass through the bottleneck of our limited communication ability.

In contrast, an AI program’s “lifespan” is indefinitely long, so it can keep improving through ‘endless’ learning. Concurrently, computing power is continually increasing as engineers build faster machines with greater memory and processing capacity. Huge networks of computers can be put to work on a shared problem.

What are the remaining challenges?

Unlike human phone conversations, where near-zero latency and natural turn-taking make interruptions manageable, AI interactions often feel clunky when users try to interject. Interestingly, humans tend to interrupt AI more frequently and aggressively than they would other humans. This poses a new challenge for voice AI developers and creates a new interaction paradigm for human-AI conversation.

The social impact

This voice revolution raises profound questions about human interaction and relationships:

Could the instant gratification of interruptible AI conversations and the ability to be rude without consequences degrade our patience and interpersonal skills?

The convenience of always-available AI consultation might reduce our reliance on human relationships. We once relied on reading maps and asking locals for directions—skills now largely abandoned as we defer to GPS. Could meaningful conversations be next?

Could we soon have more conversational exchanges with AI agents than with human companions?

Self-test: Would you rather rehearse a high-stakes presentation in front of a potentially judgmental friend or instantly consult a non-judgmental AI companion available 24/7?

What does this mean for our interpersonal relationships?

One of the most interesting challenges lies in handling interruptions, a fundamental aspect of human conversation that AI still struggles with. Common problems include:

1. Oversensitivity to background noise (tip: mute yourself when you are not speaking)

2. Inability to distinguish between relevant speakers and ambient conversation

3. Lack of visual cues that humans use to anticipate and manage interruptions
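One simple way to approach the first two problems is to gate interruptions: only treat incoming sound as a genuine barge-in if it is loud enough, lasts long enough, and comes from the person the assistant is actually talking to. The Python sketch below illustrates that idea under stated assumptions. The `Frame` type, the loudness threshold, the frame length and the `is_primary_speaker` flag (which in practice would need some form of speaker recognition) are all hypothetical, and it does nothing about the third problem, missing visual cues.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    """One short slice of microphone audio (say 20 ms); fields are hypothetical."""
    loudness: float           # normalised level, 0.0 (silence) to 1.0 (very loud)
    is_primary_speaker: bool  # assumed output of a speaker-recognition step


def is_real_interruption(
    frames: List[Frame], loudness_threshold: float = 0.1, min_frames: int = 10
) -> bool:
    """Return True once the primary speaker has been audible for min_frames frames in a row."""
    run = 0
    for frame in frames:
        if frame.loudness >= loudness_threshold and frame.is_primary_speaker:
            run += 1
            if run >= min_frames:
                return True
        else:
            run = 0  # a gap, or someone else talking, resets the count
    return False


if __name__ == "__main__":
    cough = [Frame(0.6, True)] * 3 + [Frame(0.0, True)] * 20
    chatter = [Frame(0.4, False)] * 30  # loud, but not the user
    user_speaks = [Frame(0.3, True)] * 12
    print(is_real_interruption(cough))        # False: too short
    print(is_real_interruption(chatter))      # False: wrong speaker
    print(is_real_interruption(user_speaks))  # True
```

The trade-off sits in `min_frames`: raise it and coughs stop cutting the assistant off, but genuine interruptions take longer to register.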

Different cultures have distinct interruption patterns, politeness norms, and conversation styles. Today’s systems do not account for these cultural differences, which creates a significant opportunity for AI builders to develop models that adapt to:

· Cultural background

· Individual user patterns

· Contextual cues

· Historical interactions
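As one illustration of adapting to individual user patterns and historical interactions, a system could learn how long a particular user tends to pause mid-sentence and wait proportionally longer before deciding they have finished speaking. The Python sketch below does this with an exponential moving average. The class name, the 700 ms starting value (which could, hypothetically, be seeded from a per-culture default) and the other parameters are my assumptions, not features of any existing product.

```python
class TurnTakingProfile:
    """Hypothetical per-user model of mid-sentence pauses, used to time turn-taking."""

    def __init__(self, initial_pause_ms: float = 700.0, alpha: float = 0.2, margin: float = 1.5):
        # Starting guess; hypothetically this could come from a cultural default.
        self.typical_pause_ms = initial_pause_ms
        self.alpha = alpha    # weight given to each new observation (0 to 1)
        self.margin = margin  # wait this multiple of a typical pause before replying

    def observe_pause(self, pause_ms: float) -> None:
        """Fold a newly observed mid-sentence pause into the running average."""
        self.typical_pause_ms = (1 - self.alpha) * self.typical_pause_ms + self.alpha * pause_ms

    def end_of_turn_silence_ms(self) -> float:
        """How much silence to wait for before assuming the user has finished."""
        return self.margin * self.typical_pause_ms


if __name__ == "__main__":
    profile = TurnTakingProfile()
    for pause in [1200, 1100, 1300]:  # a user who pauses longer than the default
        profile.observe_pause(pause)
    print(round(profile.end_of_turn_silence_ms()))
```

A moving average lets recent behaviour count more than old habits without storing the whole history; `alpha` controls how quickly the profile adapts.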

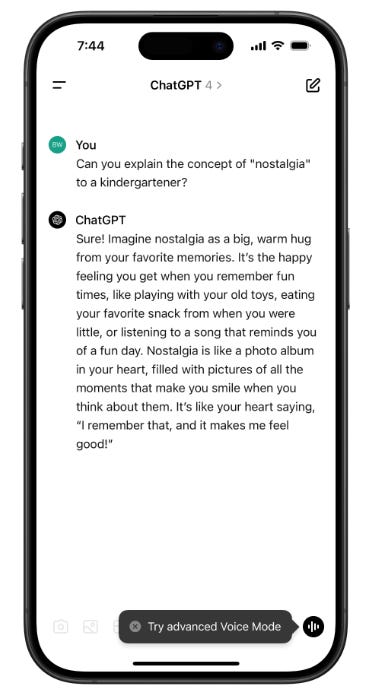

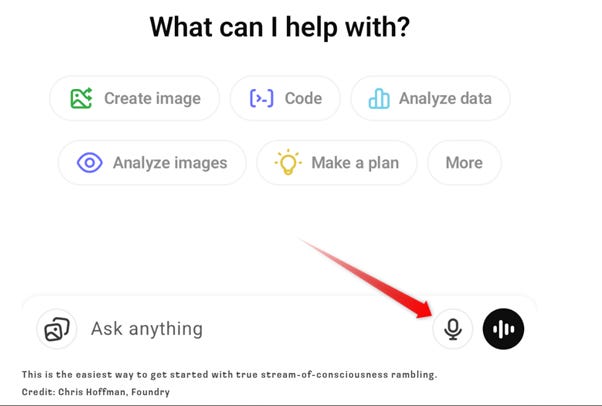

Many AI users still default to typing or speech-to-text instead of having natural spoken conversations with AI. There is certainly a collective uncertainty about speaking naturally to machines, but more likely this is a lack of awareness of the option shown at the bottom of the image above.

It only became available to free users last month, and many may not yet know how to use it.

Two years ago I argued that “To get the best out of AI you need to write and speak well”. The process of producing and exchanging meaning through dialogue is the fundamental concept of communication. In the construction of communication messages, humans and machines are now co-learners and co-travellers.

Image: Humans are now co-learners and co-travellers with machines.

To achieve optimal results from generative AI (GenAI) you need to externalise your internal dialogue. The more information and context, the better.

It’s a shift from the way we’re accustomed to thinking about these sorts of interactions, but it isn’t without precedent. When Google itself first launched, people often wanted to type questions at it — to spell out long, winding sentences. That wasn’t how to use the search engine most effectively, though. Google search queries needed to be stripped to the minimum number of words. GenAI is exactly the opposite. You need to give the AI as much detail as possible. If you start a new chat and type a single-sentence question, you’re not going to get a very deep or interesting response.

So it is essential to articulate your thoughts clearly and provide substantial context.

So, you aren’t performing a web search. You aren’t asking a question. You are iterating in a back-and-forth exchange where you provide comprehensive details and examine the AI's feedback. From there, you can identify any elements that pique your interest, delve deeper into those aspects, and continue the exploration.
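This back-and-forth works because the model sees the whole conversation so far each time you reply, so everything you have already said keeps acting as context. The Python sketch below models that with the role/content message shape many chat APIs use. It makes no network calls, and the `Conversation` class and the stand-in reply are hypothetical.

```python
from typing import Dict, List


class Conversation:
    """Hypothetical helper that accumulates a chat history as role/content messages."""

    def __init__(self) -> None:
        self.messages: List[Dict[str, str]] = []

    def user_says(self, text: str) -> List[Dict[str, str]]:
        """Add a user turn and return (a copy of) the full history the model would see."""
        self.messages.append({"role": "user", "content": text})
        return list(self.messages)

    def assistant_says(self, text: str) -> None:
        """Record the model's reply so later turns can build on it."""
        self.messages.append({"role": "assistant", "content": text})


if __name__ == "__main__":
    chat = Conversation()
    chat.user_says("I love slow-burn crime dramas with morally grey characters, "
                   "but I got bored of police procedurals years ago.")
    chat.assistant_says("(stand-in reply with a few suggestions)")
    context = chat.user_says("I've already seen those and didn't like them. "
                             "Something less bleak, maybe?")
    print(len(context))  # 3: your first ramble is still part of the context
```

Starting a new chat throws this history away, which is why a single-sentence question in a fresh chat gives the model so little to work with.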

You are co-discovering things with GenAI as your brainstorming partner.

Therefore, it is vital to adapt your prompting style; think of it as an ongoing dialogue rather than a simple question-and-answer session. This collaborative process resembles brainstorming, where you not only share your ideas but also reflect on the AI’s insights. As you engage more effectively, the quality of the responses will improve significantly.

Your experiment

As Chris Hoffman of Computerworld suggests, try this experiment: open the ChatGPT app on your Android or iOS phone and tap the microphone button at the right side of the chat box. Make sure you’re using the microphone button and not the voice chat mode button, which does not let you do this properly.

Strangely, the ChatGPT Windows app doesn’t support this style of voice input, and Microsoft’s Copilot app doesn’t, either. If you want to ramble with your voice, you’ll need to use your phone — or ramble by typing on your keyboard.

Chris explains:

After you tap the microphone button, ramble at your phone in a stream-of-consciousness style. Let’s say you want TV show recommendations. Ramble about the shows you like, what you think of them, what parts you like. Ramble about other things you like that might be relevant — or that might not seem relevant! Think out loud. Seriously — talk for a full minute or two. When you’re done, tap the microphone button once more. Your rambling will now be text in the box. Your “ums” and speech quirks will be in there, forming extra context about the way you were thinking. Do not bother reading it — if there are typos, the AI will figure it out. Click send. See what happens.

Just be prepared for the fact that ChatGPT (or other tools) won’t give you a single streamlined answer. It will riff off what you said and give you something to think about. You can then seize on what you think is interesting — when you read the response, you will be drawn to certain things. Drill down, ask questions, share your thoughts. Keep using the voice input if it helps. It’s convenient and helps you really get into a stream-of-consciousness rambling state.

Did the response you got fail to deliver what you needed? Tell it. Say you were disappointed because you were expecting something else. Say you’ve already watched all those shows and you didn’t like them. That is extra context. Keep drilling down.

You don’t have to use voice input, necessarily. But, if you’re typing, you need to type like you’re talking to yourself — with an inner dialogue, stream-of-consciousness style, as if you were speaking out loud. If you say something that isn’t quite right, don’t hit backspace. Keep going. Say: “That wasn’t quite right — I actually meant something more like this other thing.”

Now here’s the thing. The companies that offer these technologies are not properly showing people how best to use them. It’s no surprise that so many smart people are currently turned off from using them.

If you understand how human communication works, I suggest, you will know better how to use these tools effectively.

Let me know what your experiences have been and what you think.